Neural Naturalist

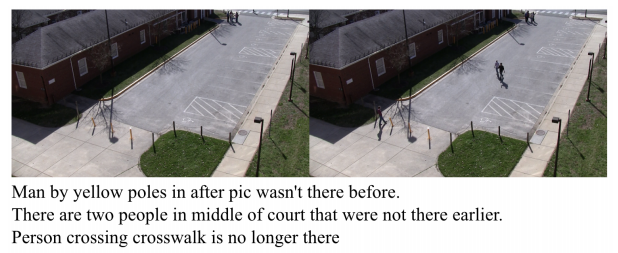

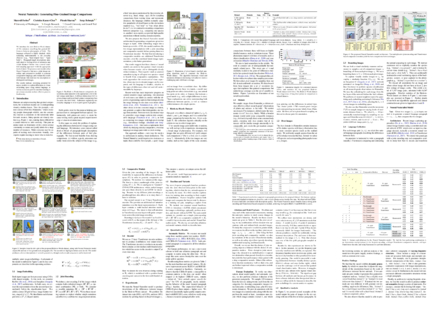

Generating Fine-Grained Image ComparisonsNeural Naturalist is our model that generates comparative language to describe fine-grained differences between pairs of related images.

A schematic of the Neural Naturalist model architecture. We find a multiplicative joint encoding (yellow; rightmost) and Transformer-based comparative module (red; bottom) yield the best comparisons between images. Top photo: jmaley (iNaturalist); bottom photo: lorospericos (iNaturalist).

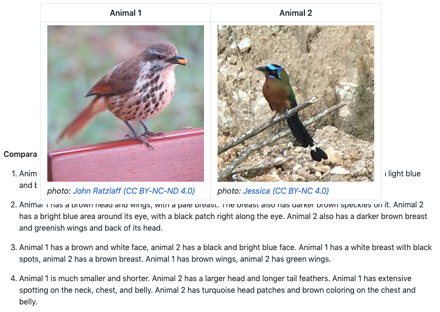

Birds-to-Words Dataset

As part of this work, we collect and release the Birds-to-Words dataset , a collection of ~41,000 sentences describing fine-grained differences between photographs of birds from iNaturalist.

The language collected is highly detailed, while remaining understandable to the everyday observer. Paragraph-length descriptions naturally adapt to varying levels of taxonomic and visual distance with the appropriate level of detail. We draw these pairs using a novel stratified sampling algorithm.

How Well Does it Work?

| Model | ROUGE-L |

|---|---|

|

CNN + LSTM

(Vinyals et al., 2015) |

0.37 |

|

CNN + Attn. + LSTM

(Xu et al., 2015) |

0.38 |

| Neural Naturalist | 0.43 |

| Human | 0.47 ± 0.01 |

Results of our model and main baselines on the test set, with text correspondence measured by rouge-l.

We compare the descriptions generated by different models to the descriptions written by humans. We use several automatic text metrics to measure the closeness of the paragraphs (including bleu-4, rouge-l, cider-d), and find rouge-l to correlate best with quality. Our model outperforms previous models according to these metrics.

animal2 has a heart shaped face , whereas animal1 has an oval face . animal2 has entirely dark eyes . animal2 has a white beak , whereas animal1 has a dark beak . animal2 has more white in its feathers .

Our human evaluation task measures whether annotators can use a description

to correctly label which is animal1 and which is animal2.

However, simply using text matching to score the models' output is not a great gauge of their quality. Ideally, we would like the descriptions they generate to allow humans to correctly distinguish between two images. We design a human evaluation that shuffles the order of the images, and asks annotators to guess which is which given a description of their differences.

| Model | Visual | Species | Genus | Family | Order | Class |

|---|---|---|---|---|---|---|

| CNN + LSTM

(Vinyals et al., 2015) |

-0.15 | 0.20 | 0.15 | 0.50 | 0.40 | 0.15 |

| CNN + Attn. + LSTM

(Xu et al., 2015) |

0.15 | 0.15 | 0.15 | -0.05 | 0.05 | 0.20 |

| Neural Naturalist | 0.10 | -0.10 | 0.35 | 0.40 | 0.45 | 0.55 |

| Human | 0.55 | 0.55 | 0.85 | 1.00 | 1.00 | 1.00 |

Human evaluation results on 120 test set samples, twenty per column. Scale: -1.00 (perfectly wrong) to 1.00 (perfectly correct). Columns are ordered left-to-right by increasing distance (visual are nearest neighbors in embedding space; the rest are taxonomic distances).

From the human evaluation, we find that Neural Naturalist exhibits competitive performance to other models at most levels of fine-grained visual distinction. And, similar to humans, it shows improvements towards more visually distinct comparisons. Human ability remains significantly higher than even the best models, which alludes to the difficulty of this task.

Why?

Expert Knowledge from Non-Experts

Eliciting natural language comparisons allows everyday people to provide detailed descriptions of adaptive granularity without any special training. Our proposed stratified sampling scheme allows us to balance coverage, relevance, comparability, and efficiency while sampling from a domain.

Even beyond the natural world, fine-grained visual categories like furniture, fashion, and cars have rich structural decomposition which yield diverse comparative language.

Photos: left: Ryan Schain (Macaulay Library); right: Myron Tay (Oriental Bird Images).

Machine Learning and Citizen Science

In a citizen science effort like iNaturalist, everyday people photograph wildlife, and the community reaches a consensus on the taxonomic label for each instance. Many species are visually similar, making them difficult for a casual observer to label correctly. This puts an undue strain on lieutenants of the citizen science community to curate and justify labels for a large number of instances.

Our work contributes one piece to the dialog of how machine learning may provide scalable aid to ongoing citizen science and biodiversity efforts.

Photos: left: miss*cee (flickr) ; mid: salticidude (iNaturalist) ; right: patsimpson2000 (iNaturalist) .

On the Shoulders of Giants

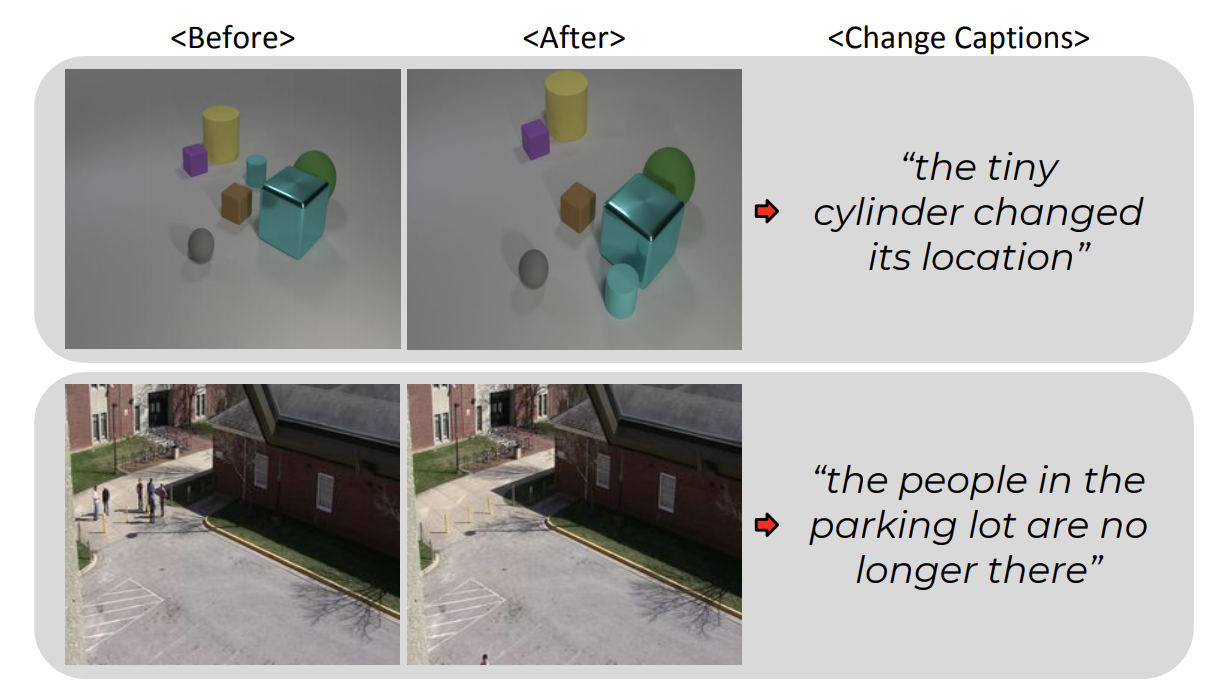

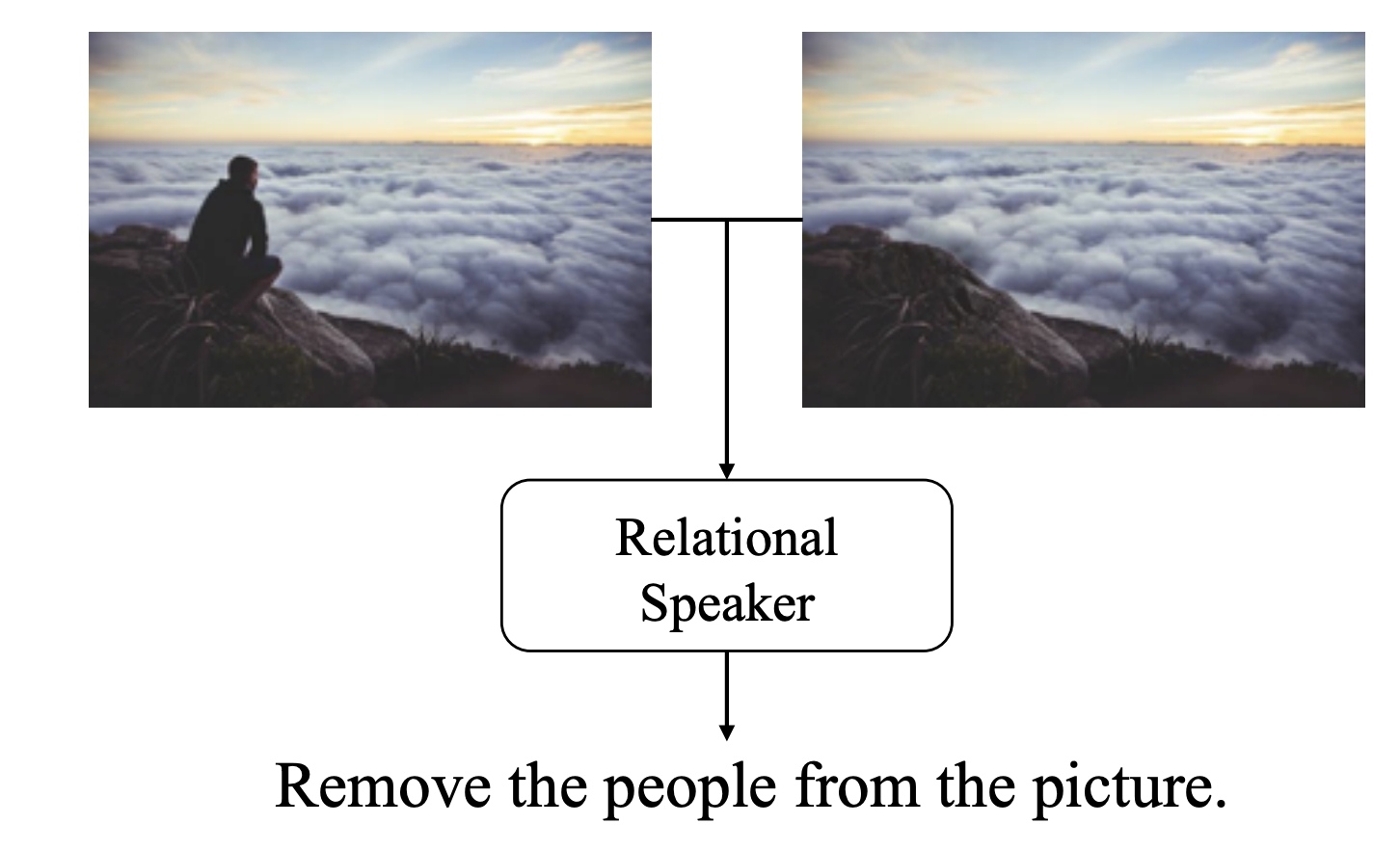

We are inspired and humbled by several pieces of closely related work. We encourage you to check them out!

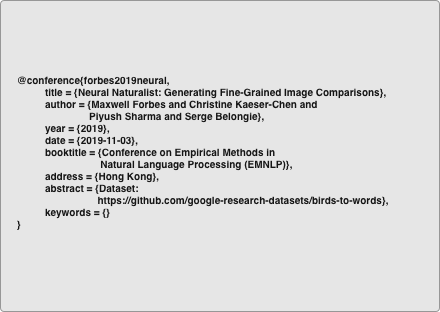

Context-aware Captions from Context-agnostic Supervision

Vedantam et al., 2017

Generating Visual Explanations

Hendricks et al., 2016

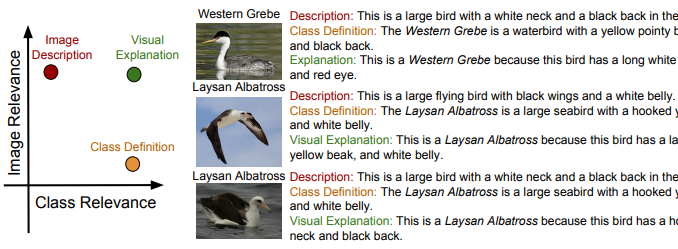

Learning to Describe Differences Between Pairs of Similar Images

Jhamtani and Berg-Kirkpatrick, 2018

Reasoning about Fine-grained Attribute Phrases using Reference Games

Su et al., 2017

Robust Change Captioning

Park et al., 2019

Expressing Visual Relationships via Language

Tan et al., 2019

emnlp 2019

emnlp 2019

birds-to-words

birds-to-words